Cluster Components

Overview of the ACP components and building blocks.

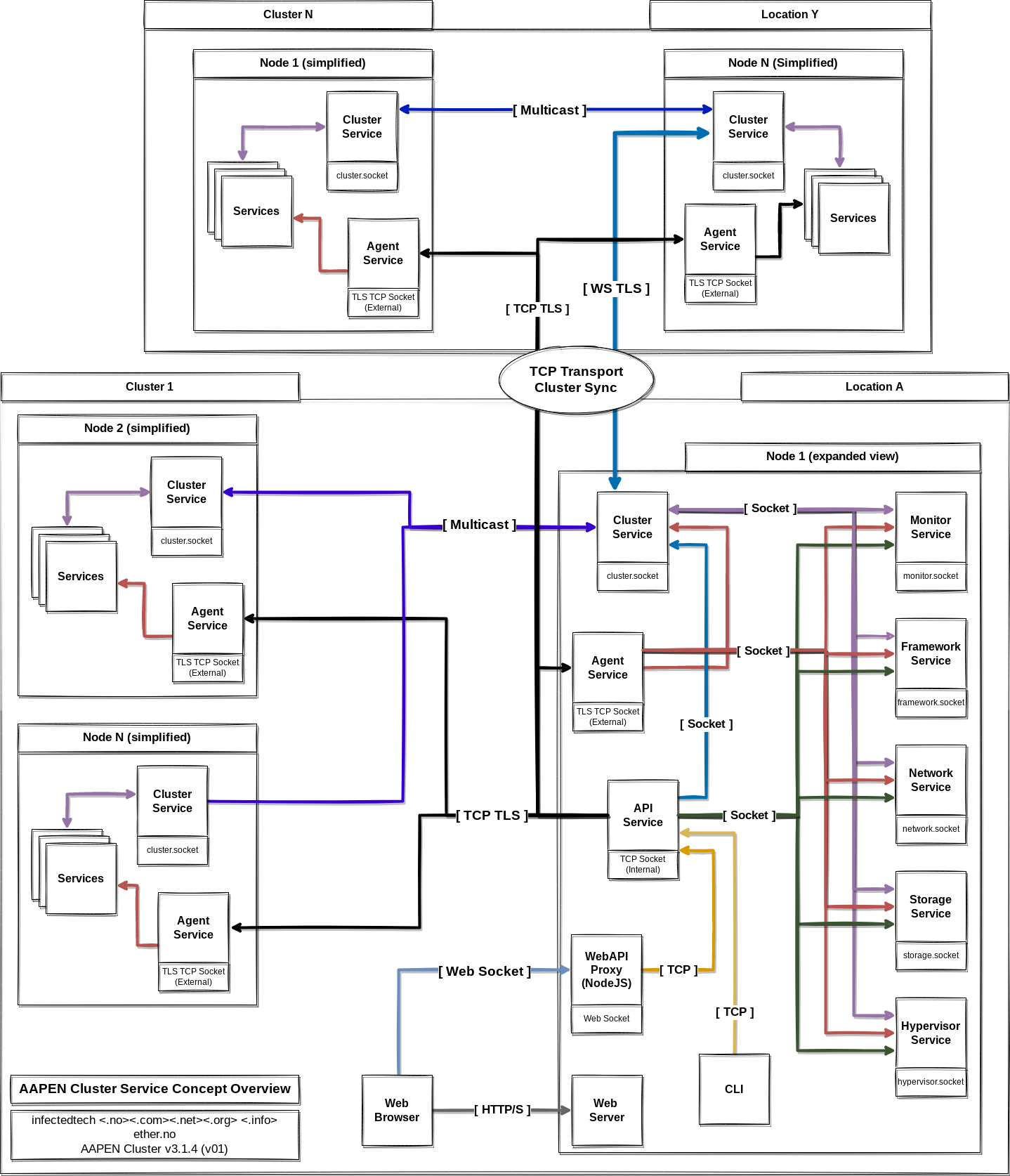

Cluster Communications

This section gives an overview of the communication concepts used in the cluster. Communication channels can be classified along two axes: internal or external to a node, and intra-cluster or extra-cluster.

External

External communications are the services that provide entry-points to a node from outside.

- [Agent]

The Agent provides a TLS-secured, TCP-based entry-point for a node. The socket is based on OpenSSL and can use certificate-based authentication (SSL_VERIFY_PEER), as well as a simple API key for authorization. The Agent acts as a proxy for external requests, most commonly generated by the API on a remote node. Some services may also communicate with a node or issue commands directly, for example the Hypervisor during system migration tasks.

- [WebAPI]

The WebAPI provides a Node.js-based WebSocket interface for the Web Interface of the cluster. It acts as a WebSocket-to-TCP proxy and provides access to the API via TCP.

- [Web Server]

A web server (Apache/Nginx) provides access to the cluster web interface. A selected set of nodes may be denoted Management nodes and run these services.
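As a rough illustration of the Agent entry-point described above, the following Python sketch builds a client-side TLS context that requires peer verification (the Python counterpart of OpenSSL's SSL_VERIFY_PEER) and attaches an API key to a request. The JSON envelope, the field names `api_key`, `command` and `payload`, and the function names are assumptions made for this example; the actual wire format used by the Agent is not specified here.

```python
import json
import ssl


def make_agent_tls_context(ca_file=None, cert_file=None, key_file=None):
    """Build a client-side TLS context that verifies the Agent's certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # CERT_REQUIRED corresponds to SSL_VERIFY_PEER on the OpenSSL side.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        # Optionally present a client certificate for mutual authentication.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


def build_request(api_key, command, payload=None):
    """Serialize a request. The envelope layout is hypothetical."""
    envelope = {"api_key": api_key, "command": command, "payload": payload or {}}
    return json.dumps(envelope).encode("utf-8") + b"\n"
```

A caller would wrap a TCP socket with `ctx.wrap_socket(sock, server_hostname=...)` and send the bytes returned by `build_request`. Certificate verification handles authentication of the peer, while the API key is checked separately for authorization.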

Internal

Internal communications for a node.

- [Unix Sockets]

Most internal communication on a node uses conventional Unix sockets. The sockets are secured by Unix file system permissions, as well as a simple API key for authorization.

- [API / TCP]

The API provides a TCP-based socket for communications that is only accessible from localhost. To communicate with the API remotely, requests must be proxied via either the Agent or the WebAPI. Depending on the recipient and the command requested, the API forwards the request either locally on the same node via Unix sockets, or to a remote node via the Agent interface.
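The forwarding decision described above can be sketched as a small routing function. This is a minimal illustration only: the `Route` type, the `route_request` name and the socket directory `/var/run/acp` are hypothetical and are not taken from the actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    transport: str  # "unix" for local delivery, "agent" for remote delivery
    target: str     # Unix socket path, or the name of the remote node


def route_request(recipient_node, service, local_node, socket_dir="/var/run/acp"):
    """Choose a transport based on whether the recipient is the local node."""
    if recipient_node == local_node:
        # Same node: deliver over the service's Unix socket (path is assumed).
        return Route("unix", f"{socket_dir}/{service}.sock")
    # Different node: hand the request to that node's Agent.
    return Route("agent", recipient_node)
```

For example, `route_request("node1", "hypervisor", "node1")` selects the local Unix socket, while the same request addressed to `"node2"` is routed via the Agent.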

Cluster Sync

Cluster synchronization takes multiple forms depending on context. The Cluster service is responsible both for keeping the current state of the cluster (CDB, Cluster Data Base) locally on the node and for publishing state changes to other Cluster services via multicast.

- [Internal Sync]

Internal sync relates to the state held locally on the node. Services publish their objects, metadata and state to the local CDB via Unix sockets.

- [Cluster Sync]

Cluster sync relates to keeping the state synchronized between the nodes in a cluster. In this context a cluster domain refers to the nodes within one L2 network domain. The Cluster service uses a multicast group to publish and keep track of state changes within a cluster domain.

- [Intra Cluster Sync]

To synchronize state between clusters that do not share an L2 domain, some Cluster services may be configured to act as L3 transports between clusters that are remote or otherwise separated by an L3 network boundary.
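To make the multicast-based publication within a cluster domain more concrete, here is a minimal Python sketch that encodes a state change and sends it to a multicast group. The group address, port, JSON message layout and function names are all assumptions for illustration; the real Cluster service may use a different encoding and transport details.

```python
import json
import socket
import struct

MCAST_GROUP = "239.1.2.3"  # hypothetical administratively scoped group
MCAST_PORT = 5007          # hypothetical port


def encode_change(node, object_id, kind, data):
    """Encode one state change; the message layout is an assumption."""
    msg = {"node": node, "object": object_id, "kind": kind, "data": data}
    return json.dumps(msg, sort_keys=True).encode("utf-8")


def decode_change(raw):
    """Decode a datagram produced by encode_change."""
    return json.loads(raw.decode("utf-8"))


def publish_change(raw, group=MCAST_GROUP, port=MCAST_PORT, ttl=1):
    """Send one datagram to the group; TTL 1 keeps it on the local segment."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    try:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL,
                        struct.pack("b", ttl))
        sock.sendto(raw, (group, port))
    finally:
        sock.close()
```

A TTL of 1 matches the idea that a cluster domain is bounded by the L2 network. Crossing an L3 boundary would need a separate transport, as described for the L3-capable Cluster services.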

Cluster Services

Services provide a feature or functionality in the cluster. These may be fully fledged services like the Hypervisor or Networking service, or specialized services like the Agent or WebAPI.

Services in the framework can conceptually be divided into three main categories:

- API and Management

- Core Services

- Supportive Services

API and Management

These services provide access or administration functionality in the cluster. Some services also act as “glue” or “proxies” for the framework.

- [Agent]

The Agent provides the main entry-point for a node. It acts as a proxy and router between externally received TLS requests over TCP/IP and the internal Unix socket based structure. The Agent also provides an OpenSSL-based certificate authentication mechanism, as well as API key based authorization.

- [API]

The API provides the glue for communication with, as well as within, the cluster. It provides logic and abstraction for core functionality and operations in the cluster. The API primarily exists in two forms: as a service and as libraries. Some services may use the API directly, while others may use program libraries that provide the functionality.

- [CLI]

The Command Line Interface provides a tool for managing the cluster. The CLI is primarily used for debugging and for complex or other functionality not exposed via the Web Interface.

- [WEB]

The Web Interface provides a convenient, easy-to-use way to manage a cluster. Ideally, all functionality required to manage a cluster on a day-to-day basis should be available via the Web Interface.

Core Services

Core services provide functionality for the cluster. These are the services that do the work.

- [Hypervisor]

The Hypervisor is the most essential service in the framework. It manages the virtual machines and containers that run on the node. While the actual virtual machines are handled by a service called the VMM (Virtual Machine Manager), the Hypervisor is responsible for spawning the containers, initializing the networking, and keeping track of the state of the virtual machines and VMM containers while they run. The Hypervisor is also responsible for monitoring the statistics (CPU, memory, performance data, and so forth) of the node.

- [Network]

The Network service handles the networking for the cluster. This includes establishing the underlying network cards, bridges, and virtual interfaces. It also starts, stops and manages the virtual interfaces for virtual machines, and collects interface statistics, including monitoring data and statistics for virtual interfaces.

- [Storage]

The Storage service provides storage management for the cluster. It also monitors storage devices and pools and reports their state and metadata to the cluster.

- [Framework]

The Framework is the service manager for the cluster. This service starts and stops the other services in the cluster, and manages and keeps track of their state. It is also the Framework's responsibility to “bootstrap” a cluster and start the required services during startup. The Framework is also used by the Hypervisor to spawn the VMM containers.

Supportive Services

Supportive services provide synchronization, monitoring and health checks in the framework.

- [Cluster]

The Cluster service, also known as the CDB (Cluster Data Base), keeps track of the current state of the node. This state is also synchronized to the other Cluster services in the cluster. Local services running on a node report their state to, and fetch state from, the CDB. The Cluster service publishes its state changes over multicast to participating nodes to keep state synchronized within a cluster domain.

- [Monitor]

The Monitor service monitors the state of the services running in the cluster. The Monitor is not tied to a specific node; it monitors all state data located in the Cluster Data Base (CDB). The Monitor provides a Health Score for the cluster and may also generate alerts and alarms on the state of the cluster. There may be multiple Monitors in a cluster; one Monitor is designated the “primary” and is responsible for alarm generation and publication. If the primary Monitor fails, a vote among the secondaries elects a new primary.
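The voting procedure among secondary Monitors is not detailed here. As one possible illustration, the sketch below assumes that each secondary votes for the lowest Monitor ID it currently considers healthy, and that a candidate becomes primary only with a strict majority of the votes. Both rules, and the names `cast_vote` and `elect_primary`, are assumptions and not a description of the actual election protocol.

```python
from collections import Counter


def cast_vote(healthy_ids):
    """A secondary votes for the lowest ID it believes is healthy (assumed rule)."""
    return min(healthy_ids) if healthy_ids else None


def elect_primary(views):
    """Tally votes from the secondaries.

    `views` maps each secondary's ID to the set of Monitor IDs it sees as
    healthy. Returns the winner if it holds a strict majority, else None.
    """
    votes = Counter()
    for _voter, seen in views.items():
        choice = cast_vote(seen)
        if choice is not None:
            votes[choice] += 1
    if not votes:
        return None
    winner, count = votes.most_common(1)[0]
    return winner if count > len(views) / 2 else None
```

Requiring a strict majority means that a partitioned minority of Monitors cannot elect its own primary, at the cost of having no primary until enough secondaries agree.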

Objects

Cluster Objects are datatypes related to core elements of the cluster. Objects are fundamental types, and may also have other data types associated with them. Examples of such data elements may be metadata [meta] and statistics [stats].

Object Types

Each core element of the cluster is associated with an object type, such as services, nodes, systems, storage, and so forth. Some object types also have sub-types. One example is Storage, which may have a sub-type of “device” or “pool”. The Services object also has sub-types for the type of service it refers to, such as “hypervisor”, “storage”, “network” and so forth.

- [System]

The System object contains data about a system.

- [Node]

The Node object contains information about a Node.

- [Storage]

Storage objects contain information about storage. The primary types of storage are devices, pools and shares.

- [Network]

The Network objects contain information about the networks associated with a cluster. Networks may be of multiple types: conventional VLAN bridges, trunks, and DPDK VPP VLAN devices. Support for DPDK VPP trunk devices will be added in the future.

- [Services]

The service object contains a number of sub-types relating to the different services that may run in a cluster; this includes hypervisor, storage, network, monitor, and so forth.
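The object types and sub-types listed above could be modeled roughly as follows. This is a sketch under stated assumptions: the class name `ClusterObject`, its fields, and the validation rules are hypothetical, and the sets only include the types and sub-types named in the text, which is not an exhaustive list.

```python
from dataclasses import dataclass, field
from typing import Optional

# Object types and sub-types named in the text; not exhaustive.
OBJECT_TYPES = {"system", "node", "storage", "network", "service"}
SUBTYPES = {
    "storage": {"device", "pool", "share"},
    "service": {"hypervisor", "storage", "network", "monitor"},
}


@dataclass
class ClusterObject:
    object_id: str
    object_type: str
    subtype: Optional[str] = None
    data: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject unknown object types.
        if self.object_type not in OBJECT_TYPES:
            raise ValueError(f"unknown object type: {self.object_type}")
        # Reject sub-types that the object type does not define.
        allowed = SUBTYPES.get(self.object_type, set())
        if self.subtype is not None and self.subtype not in allowed:
            raise ValueError(
                f"{self.object_type} has no sub-type {self.subtype}")
```

For example, `ClusterObject("pool0", "storage", "pool")` is accepted, while a Node object with a sub-type of `"pool"` is rejected.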

Object Metadata

Objects are also associated with a set of metadata. This is either [meta], which contains state data, or [stats], which contains statistics or other performance metrics for the respective object.

Services report both metadata and statistics to the CDB at short intervals, which are published to the cluster.

- [Metadata]

Metadata contains information about the state of an object. This may be considered “non-persistent” data, as it is not reflected in the object itself (which is persistent) but describes the current state of the object. Examples of such metadata are the last time the object was updated, which node and/or service updated the object, the state of the object (for systems, whether the system is loaded or unloaded), and so forth.

- [Statistics]

Statistics contain information about an object relating to its state or performance. For a System object such statistics may be the amount of CPU or memory consumed, the size of storage currently consumed, and so forth. For a Network object such statistics may be the number of packets transmitted or received.

Metadata and Statistics contain information about Objects, and for many objects such data relates to multiple Nodes. For example, all Nodes subscribing to a Network publish their statistics and metadata state to the respective Network object. The statistics and metadata for such objects therefore need to be handled separately by the Cluster Data Base (CDB).
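One way to picture this separate handling is a store that keeps the latest statistics per object and per reporting Node, so that reports from different Nodes do not overwrite each other. The sketch below is illustrative only: the `ObjectStateStore` class, its methods and the `rx_packets` counter name are hypothetical and do not describe the actual CDB data model.

```python
from collections import defaultdict


class ObjectStateStore:
    """Keeps the latest statistics per (object, node) pair."""

    def __init__(self):
        # object_id -> node -> latest stats dict reported by that node
        self._stats = defaultdict(dict)

    def report(self, object_id, node, stats):
        """Record a node's latest report, replacing its previous one."""
        self._stats[object_id][node] = dict(stats)

    def per_node(self, object_id):
        """Return a copy of the per-node view for one object."""
        return dict(self._stats.get(object_id, {}))

    def total(self, object_id, counter):
        """Sum one counter across all nodes reporting on the object."""
        reports = self._stats.get(object_id, {})
        return sum(s.get(counter, 0) for s in reports.values())
```

With this layout a Network object can expose both the per-node figures and a cluster-wide aggregate, and a fresh report from one Node only replaces that Node's previous entry.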